The monolithic architectures of yesteryear came with some major restrictions in areas such as scalability, agility and maintainability. Your entire application would often be deployed to a single application server running on a single machine. Sometimes, even the database instance would be deployed to the same machine. You’d test everything extensively on a staging machine, with endless repetitions of the deploy, test, fix cycle. You’d hope everything would then work when deployed, but one small bug could break the whole system and result in late nights trying to patch production or roll back the deployment. Sounds like a fun time debugging, doesn’t it?

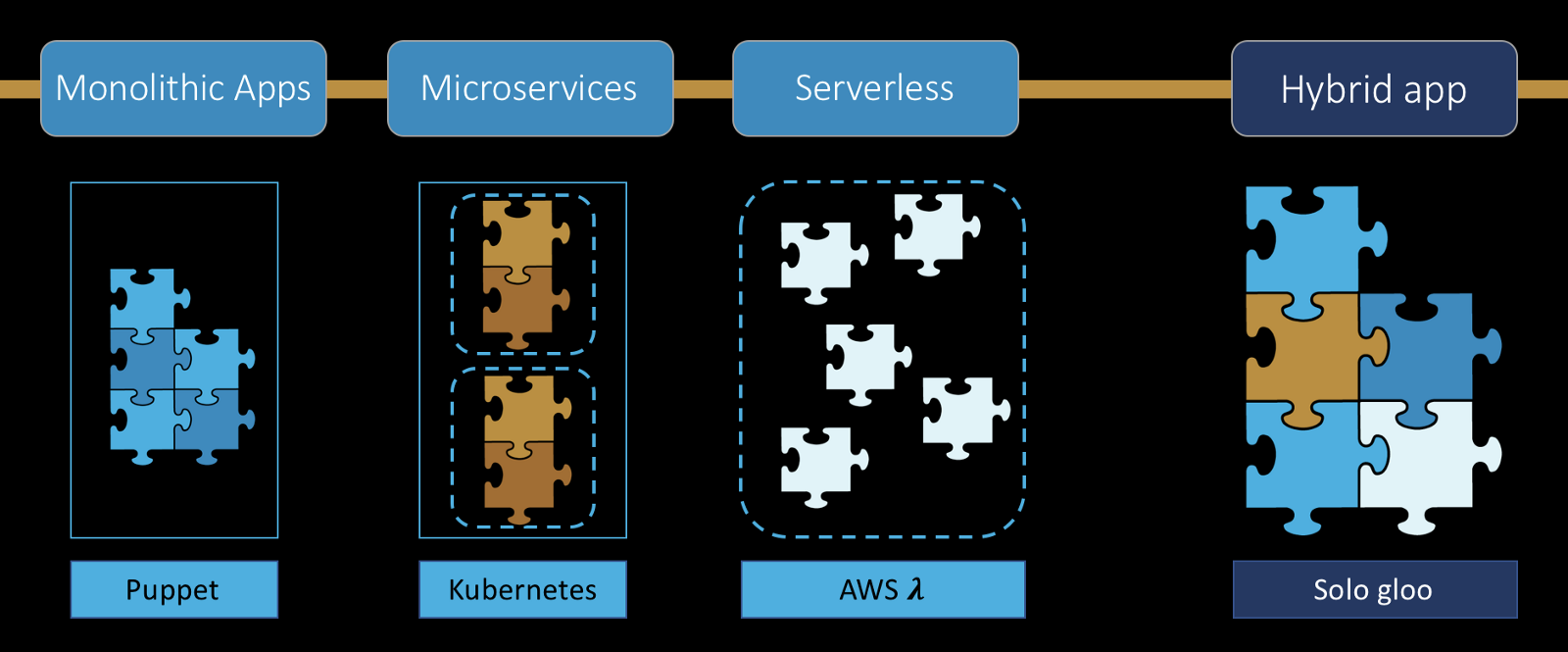

Nowadays, applications increasingly go down the microservices or serverless route, as infrastructure provisioning in the cloud keeps becoming more convenient, more performant and cheaper. While we can think of serverless as simply a subset of the microservices pattern that uses a particular implementation and deployment style, I believe it is sufficiently differentiated from earlier microservices patterns, such as containerization, to warrant a comparison between the two.

Here at Fathom, we use both serverless and microservices, mainly so we can quickly spin up cloud infrastructure for our various projects and focus on delivering business value at an earlier stage. It’s also cost-effective, which is a major advantage for us and our customers too.

One approach isn’t necessarily better than the other; otherwise, I could end this post right about here. This post covers what the serverless and microservices architectures are, the pros and cons that come with each, and the considerations for choosing one over the other based on your requirements.

A word of note: I will mostly be talking about services in AWS, but there are equivalents in most of the other big cloud providers, like Google Cloud and Microsoft Azure.

Microservices

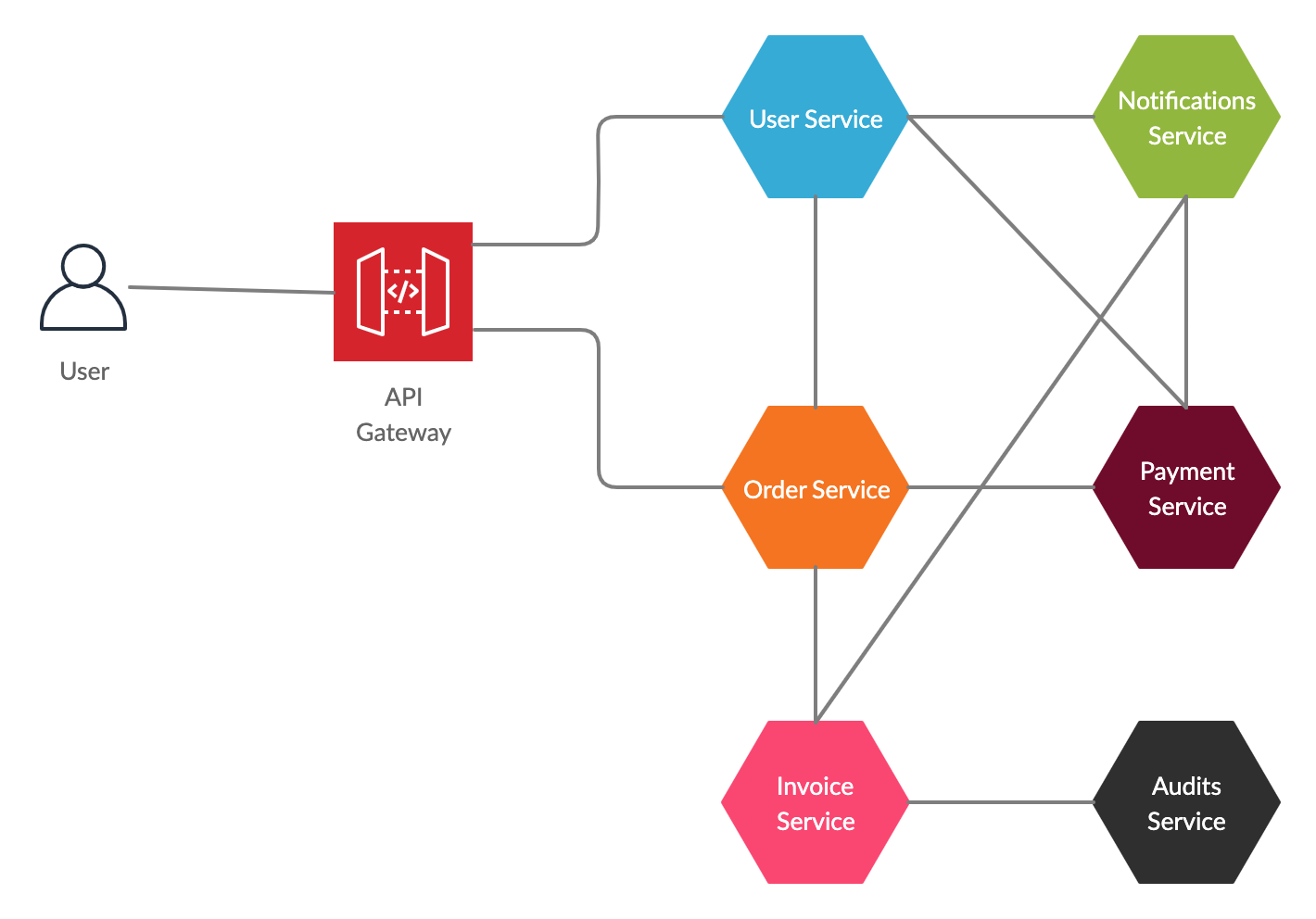

A microservices architecture splits a large application into a suite of small, modular pieces. These modules, or ‘services’, are loosely coupled, and each microservice focuses on a single aspect of the application’s functionality. Each service exposes a simple interface for communicating with other services, e.g. a REST API, and each can have its own data store.
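To make the loose coupling a bit more concrete, here is a minimal sketch in Python. The service names (`OrderService`, `InventoryService`) and their methods are made up for illustration, and a plain method call stands in for what would normally be a REST call over the network. The point is only that each service owns its data and the order service knows nothing about inventory beyond a narrow interface.

```python
from dataclasses import dataclass, field
from typing import Protocol


class InventoryClient(Protocol):
    """All the order service knows about inventory: this one operation."""

    def reserve(self, sku: str, quantity: int) -> bool: ...


@dataclass
class InventoryService:
    """Hypothetical inventory service that owns its own stock data."""

    stock: dict[str, int] = field(default_factory=dict)

    def reserve(self, sku: str, quantity: int) -> bool:
        available = self.stock.get(sku, 0)
        if quantity <= 0 or quantity > available:
            return False
        self.stock[sku] = available - quantity
        return True


@dataclass
class OrderService:
    """Hypothetical order service with its own store.

    It never reads inventory data directly; it only calls the interface.
    """

    inventory: InventoryClient
    orders: list[dict] = field(default_factory=list)

    def create_order(self, sku: str, quantity: int) -> dict:
        if not self.inventory.reserve(sku, quantity):
            return {"status": "rejected", "sku": sku, "quantity": quantity}
        order = {
            "id": len(self.orders) + 1,
            "status": "accepted",
            "sku": sku,
            "quantity": quantity,
        }
        self.orders.append(order)
        return order
```

Because `OrderService` depends only on the `InventoryClient` interface, the inventory side could be rewritten, redeployed or swapped for an HTTP client without touching the order code.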

Microservices are usually organised and managed in a container environment such as Kubernetes with Docker, although there are numerous alternatives out there. Containers allow each microservice to be developed, deployed and maintained independently, with less interference to other services or the application as a whole. Each microservice also has its own isolated environment and technology stack.

Pros

I’ve already pointed out a few above but here are some more to consider:

Quicker deployment - A service can be quickly updated and deployed independently without redeploying a whole application.

Ease of understanding - Since the application is simplified into parts, developers can find it easier to understand how a service works.

Technology design and upgrades - The technology stack of each service can be easily upgraded or swapped out completely without upsetting any other service when using containers.

Easier to find bugs - Any service-breaking behaviour only breaks that service, so the problem can be quickly isolated whilst leaving the rest of the application usable.

Quicker continuous integration - Since services are split functionally, teams can be organised to focus on one or more services to independently develop and deploy. Continuous integration can be improved and features can be rolled out more frequently and quickly.

Cons

Overhead and more resources - We’ve all heard for a long time now how containers have become a developer’s great saviour. Although they are less resource-heavy than spinning up a bunch of virtual machines, deploying and managing containers can still carry a lot of memory overhead.

Distributed system design - A distributed system has to be carefully designed. This adds time and complexity: inter-service communication now needs to be implemented, and transactions and operations can span multiple distributed services.

Testing difficulties - Global integration testing can become quite cumbersome and more difficult than with monolithic architectures.

Debugging - Although it is beneficial to have a failure contained to a single service, reading logs can be difficult, as each service has its own set of logs and jumping between them can be confusing.

Serverless

In short, serverless allows the developer to focus on the application, not the infrastructure.

Server-side logic is still written by a developer, but the code is run on stateless compute containers managed by the cloud provider. So serverless is not ‘serverless’ per se; it just means that the only thing on the server-side you need to worry about is the code. Most of the infrastructure to run your code is provisioned and managed by the cloud provider.

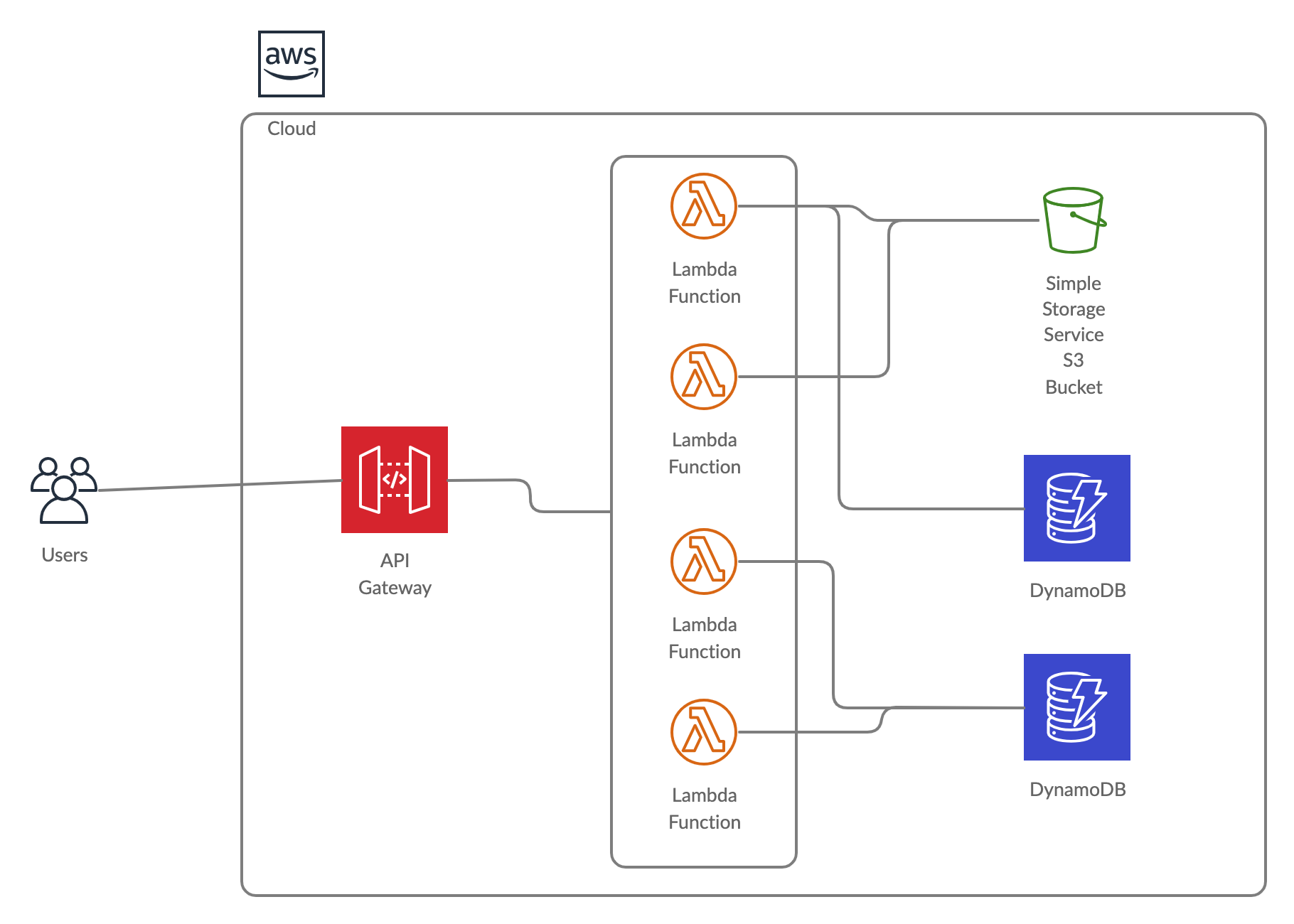

For example, to create an order using AWS, you would write the code needed to take the input and pass it to a data store. This code gets uploaded to AWS as a Lambda function, along with its configuration, permissions, environment variables and a runtime. AWS offers multiple runtimes, including Node.js, Python, Ruby, Java, .NET and Go. When the function gets invoked, an instance of the code is loaded into a micro container somewhere in AWS, executed, and stopped straight after.
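As a rough idea of what such a function could look like, here is a minimal sketch in Python. It assumes the function sits behind an API Gateway proxy integration, so the request arrives as a JSON string in `event["body"]`. The field names (`item`, `quantity`) and the in-memory `ORDERS` dict are my own placeholders; a real function would write to an actual data store such as a DynamoDB table instead.

```python
import json
import uuid

# Stand-in for a real data store (assumption for this sketch). A dict keeps
# the example runnable without AWS credentials; it would NOT persist between
# execution environments in a real deployment.
ORDERS = {}


def _response(status_code, payload):
    """Build a response in the shape API Gateway proxy integrations expect."""
    return {"statusCode": status_code, "body": json.dumps(payload)}


def lambda_handler(event, context):
    """Create an order from a JSON request body and store it."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})

    if not isinstance(payload, dict):
        return _response(400, {"error": "body must be a JSON object"})

    item = payload.get("item")
    quantity = payload.get("quantity")
    if not item or not isinstance(quantity, int) or quantity < 1:
        return _response(400, {"error": "item and a positive integer quantity are required"})

    order_id = str(uuid.uuid4())
    ORDERS[order_id] = {"item": item, "quantity": quantity}
    return _response(201, {"orderId": order_id})
```

Note that there is no server, port or routing code here. The handler only takes an event and returns a response; everything around it is configuration.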

The instance of the code is kept in cache for a certain time so it can be quickly loaded and executed again when triggering it. When a function is out of cache and triggered, it can take up to a few seconds to load and execute. These are called ‘cold starts’ and can become problematic; more on cold starts later.
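One practical consequence of this caching is that anything defined at module level is set up once per cold start and then reused while the instance stays warm. The sketch below illustrates that with a simple counter. The `load_config` helper and its return value are hypothetical stand-ins for slow setup work such as opening connections.

```python
# Module-level code runs once per execution environment, i.e. on a cold start.
# Later "warm" invocations reuse whatever was set up here.
_invocation_count = 0


def load_config():
    """Hypothetical slow setup step (e.g. reading config, opening connections)."""
    return {"table": "orders"}


CONFIG = load_config()  # paid for once per cold start, not once per request


def handler(event, context):
    """Report whether this invocation was the first one in this environment."""
    global _invocation_count
    _invocation_count += 1
    return {
        "cold_start": _invocation_count == 1,
        "invocations_in_this_environment": _invocation_count,
        "table": CONFIG["table"],
    }
```

Calling `handler` twice in the same process mimics a cold invocation followed by a warm one: only the first call reports `cold_start` as true. The flip side is that module-level state should only ever be treated as a cache, since the provider can discard the instance at any time.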

Lambda has endless uses other than just APIs, but there are too many to mention here. APIs can be created with Lambda functions in AWS, usually with an API Gateway instance mapping GET, POST, etc. requests to a bunch of Lambda functions that generally perform CRUD operations on databases and other data stores. This is a diagram showing an example:

At Fathom, we lean towards serverless as much as possible when creating APIs for our projects, and we create infrastructure mostly with Terraform, from API Gateway instances to the functions themselves. Alternatives include AWS CloudFormation, AWS SAM and even full frameworks that do it all and attempt to be cloud provider agnostic, like the Serverless Framework.

As our serverless implementations are, in fact, microservices, all of the advantages listed above for microservices also apply to serverless. Below, we list some of the additional pros as well as some cons.

Pros

It’s cost-effective - You only pay for the execution time in Lambda. With microservices, a cluster is always running, e.g. on an EC2 instance, so overhead costs would be higher.

Not needing to worry about scaling - AWS looks after the scaling. At the time of writing, Lambda allows an initial burst of between 500 and 3,000 concurrent executions, depending on the region.

You can focus more on development - You don’t need to worry about the infrastructure headaches of managing and deploying containers. You just need to upload your code and it’s ready to go.
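To put the cost point in numbers, here is a back-of-the-envelope sketch in Python. It assumes the usual Lambda billing model of a per-request charge plus a charge per GB-second of execution, against a flat hourly rate for always-on instances. Every price in the usage example is a made-up placeholder rather than a real AWS rate, so plug in current figures from the pricing pages before drawing conclusions.

```python
def lambda_monthly_cost(invocations, avg_duration_ms, memory_mb,
                        price_per_gb_second, price_per_million_requests):
    """Estimate a monthly pay-per-execution bill.

    Compute is billed in GB-seconds: duration in seconds multiplied by the
    memory allocated to the function, expressed in GB.
    """
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = (invocations / 1_000_000) * price_per_million_requests
    return compute_cost + request_cost


def always_on_monthly_cost(instances, price_per_instance_hour, hours_per_month=730):
    """Estimate a monthly bill for instances that run whether or not they are used."""
    return instances * price_per_instance_hour * hours_per_month


# Placeholder prices for illustration only (NOT real AWS rates).
serverless = lambda_monthly_cost(
    invocations=1_000_000,
    avg_duration_ms=100,
    memory_mb=1024,
    price_per_gb_second=0.00002,
    price_per_million_requests=0.20,
)
cluster = always_on_monthly_cost(instances=2, price_per_instance_hour=0.05)
```

The shape of the two formulas is the interesting part: the serverless bill scales with traffic and drops to nothing when idle, while the always-on bill is constant. At high, steady traffic the comparison can flip, which is worth checking with your own numbers.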

Cons

Vendor lock-in - You can only run functions on the options the cloud providers give you. There are restrictions on customising the backend infrastructure, like CPU power or RAM limits. Microservices don’t have this lack of configurability.

Runtime - Each function has a maximum run-time of 15 minutes, so heavy or time-consuming processing wouldn’t be suitable for serverless.

Cold starts - If a Lambda function hasn’t run for a certain amount of time, its instance gets removed from cache. The first time it gets triggered, or if it hasn’t been triggered in a while, it can take a couple of seconds longer than usual to run since it needs to be loaded in again.

Local/remote testing - It’s less straightforward to run your functions locally. There are options like Lambda Local, LocalStack, AWS SAM and Serverless Framework that can help replicate running your functions in a local environment. They can be fickle in behaviour, but should improve in time as the technologies mature. Classic local runtime debuggers with line-by-line stepping are also not possible with Lambda, although Microsoft Azure Functions does support remote debugging with VS Code.

Which to use?

There is no right answer here. It really does depend on your use case and some subjective preferences.

For more menial tasks like DB accesses, serverless is almost always preferable. The same goes if you need to quickly spin up a working application without much need or desire to manage infrastructure, and development needs to start as soon as possible. It is also a good fit when you need to build a lightweight, flexible application that can be expanded or updated quickly.

However, for heavier and time-consuming data processing, microservices can be more suitable, as the infrastructure and technology stack can be adapted to fit the needs. If you need reliable response times in the milliseconds, microservices are also more suitable. There are some workarounds for the cold start problem - here’s a good explanation. Amazon has also recently introduced something called Provisioned Concurrency for Lambda, which may suit some use cases. For most use cases, though, Provisioned Concurrency will probably end up being more expensive than the conventional microservices pattern.

It doesn’t mean you have to pick one over the other. You can use a combination of the two, even with monolithic architectures. Right tool for the right job, right? A hybrid system can have microservices to do the heavy lifting of processing whilst the simpler tasks can be handled with serverless. Have fun designing that system though.

Conclusion

Microservices and serverless have come a long way from ‘old-timey’ monolithic architectures. They go a long way towards satisfying today’s application requirements of easy scalability, flexibility and continuous integration, and the technologies to create them are constantly improving. However, deep consideration is still needed when choosing between them while designing infrastructure. It is all about choosing wisely and leveraging the advantages of each approach.